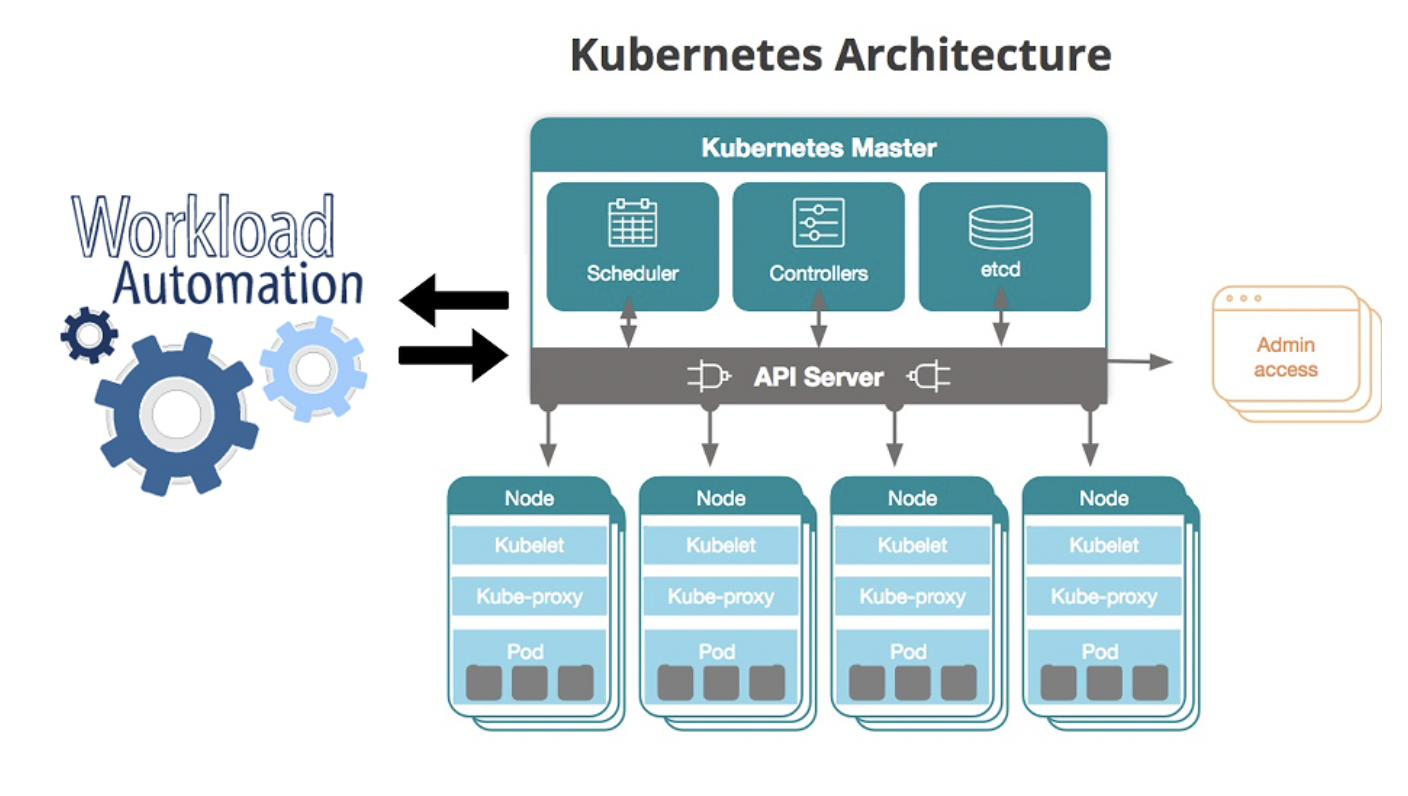

Industries are moving toward microservice and cloud-native architectures that use containers to scale and deploy applications. But containers bring their own challenges and add complexity to the infrastructure you have to maintain, especially in large, dynamic environments. (If you are not familiar with containers and Kubernetes, don’t worry: catch up with our latest post, Containers and Workload Automation 101.)

Are your applications built using containers—microservices packaged with their dependencies and configurations? And are you looking for a solution to manage and deploy these containers efficiently?

Kubernetes, or k8s for short, is the prominent tool for container orchestration: automating the deployment, management, scaling, and availability of containers.

But what if you need to orchestrate Kubernetes jobs in a logical sequence, with jobs that depend on other jobs? And does the operations team have to be well versed in Kubernetes to do it?

Don’t worry: the “Kubernetes plugin” for Workload Automation is a one-stop solution.

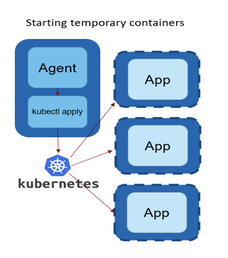

Design your workload to maximize the benefits of the elastic scaling provided out of the box by Kubernetes, the new grid computing. The Kubernetes plugin, now available in Workload Automation, submits and monitors a k8s job, retrieves its logs, and cleans up the resources used in the cluster to run the job, as shown in the following picture:

Let’s begin:

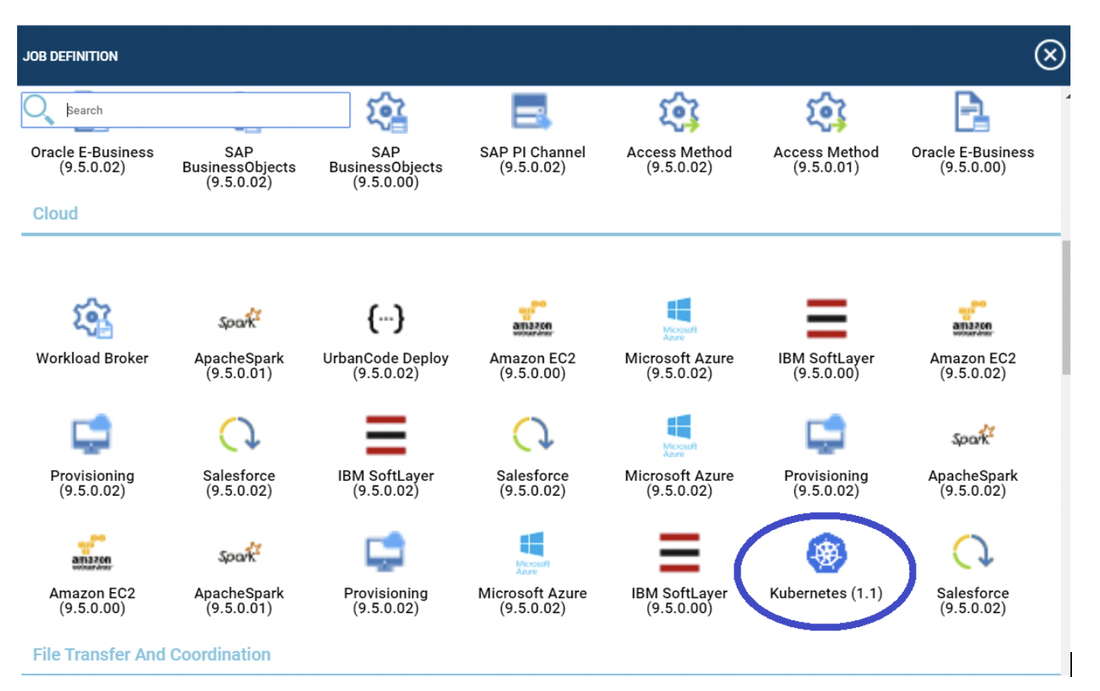

First, log in to the Dynamic Workload Console and open the Workload Designer. Choose to create a new job and select the “Kubernetes” job type in the Cloud section.

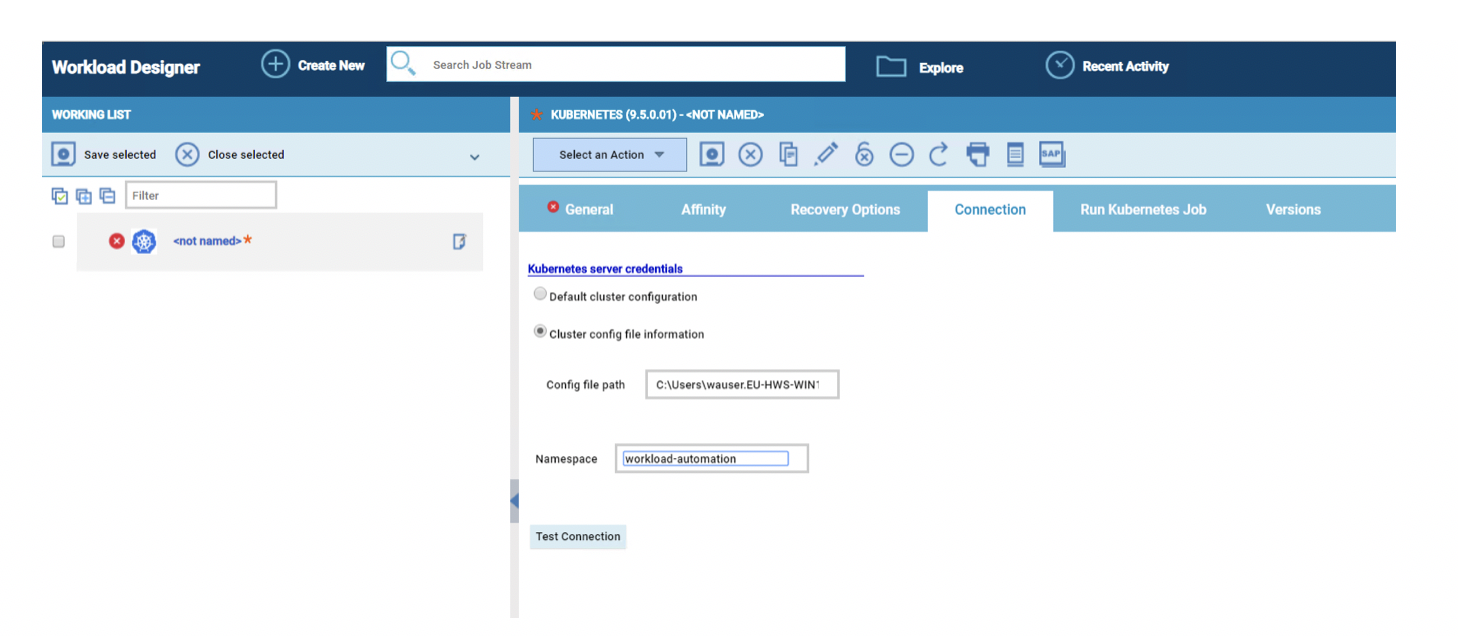

Establishing a connection to the Kubernetes cluster:

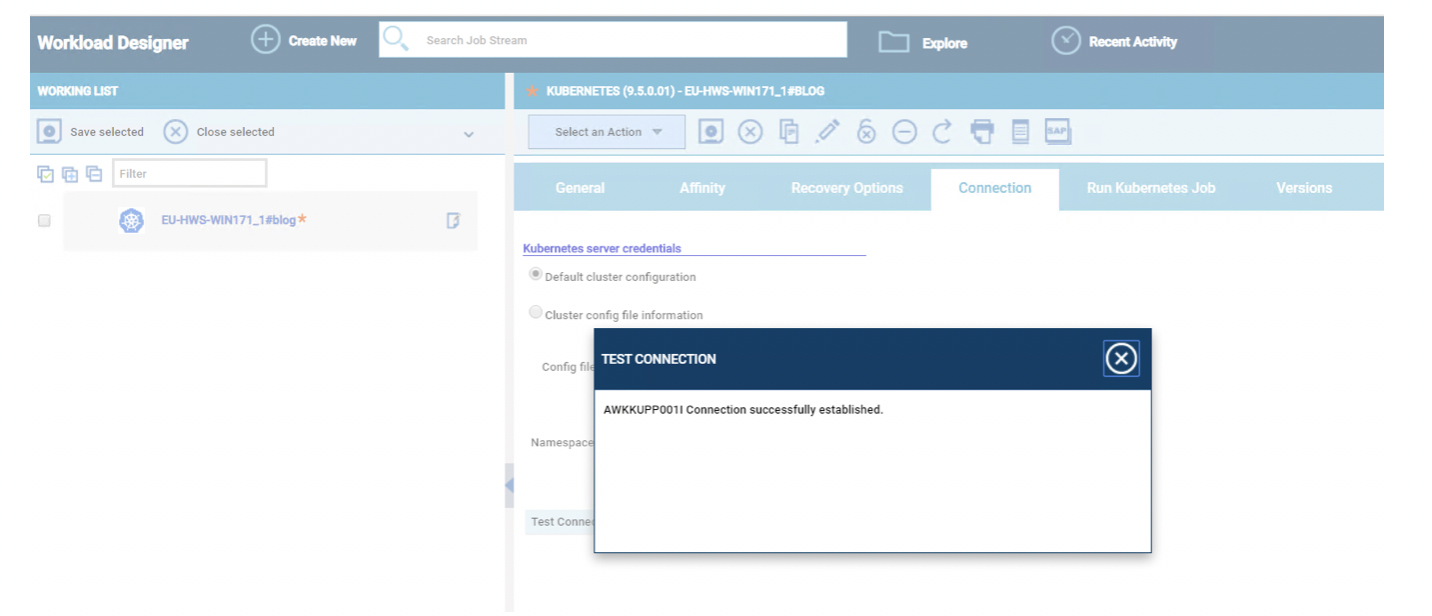

In the job definition interface, select the “Connection” tab and specify the connection parameters to reach your Kubernetes cluster.

The plugin provides two options to establish a connection to the cluster:

- Default cluster configuration: Choose this option if the agent is configured or deployed as a service in the k8s cluster. In this case, the agent is pre-configured with the cluster information.

- Cluster Config file information: Choose this option to establish a connection to a remote k8s cluster. In this case, you need to provide the path, on the agent, of the config file that contains the cluster information, in either JSON or YAML format.
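
To give an idea of what such a config file can contain, here is a minimal sketch in Python that builds a kubeconfig-style structure and writes it as JSON. It only illustrates the standard kubeconfig layout; it is not taken from the plugin, and the server URL, token, and names used here are hypothetical placeholders that you would replace with the values for your own cluster.

```python
import json
from pathlib import Path


def build_kubeconfig(server, token, namespace="default", name="remote-cluster"):
    """Return a minimal kubeconfig as a plain dict (all values are placeholders)."""
    user = f"{name}-user"
    context = f"{name}-context"
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": name, "cluster": {"server": server}}],
        "users": [{"name": user, "user": {"token": token}}],
        "contexts": [
            {
                "name": context,
                # The namespace set here is the one a client falls back to
                # when no namespace is given explicitly.
                "context": {"cluster": name, "user": user, "namespace": namespace},
            }
        ],
        "current-context": context,
    }


def write_kubeconfig(path, config):
    """Write the config as JSON and return the path that was written."""
    target = Path(path)
    target.write_text(json.dumps(config, indent=2))
    return target
```

For example, `write_kubeconfig("wa-cluster.json", build_kubeconfig("https://k8s.example.invalid:6443", "PLACEHOLDER-TOKEN", namespace="batch"))` would produce a file whose path you could then enter in the job definition. The file name is, again, only an assumption for the example.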

Apart from the connection settings, there is also an option to provide the “Namespace” in the k8s cluster to which the job is submitted.

The “Namespace” field is not mandatory. If you don’t specify a value for it, the plugin tries to fetch an already configured namespace, in the following order.

In case you selected the default cluster configuration:

- $KUBECONFIG

- $HOME/.kube/config

- /var/run/secrets/kubernetes.io/serviceaccount/namespace

- default namespace

In case you selected the cluster config file information:

- Namespace defined in the Config file specified in the path field on the Dynamic Workload Console

- default namespace

So, if you have not provided a value in the UI, the plugin looks for an already configured namespace. If no namespace has been set, the plugin submits the k8s job to the “default” namespace.
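
The lookup order can be sketched in a few lines of Python. This is only an illustration of the fallback logic described above, not the plugin’s actual code: the function names are made up for the example, the sketch reads JSON kubeconfig files only (the Python standard library has no YAML parser), and it treats `$KUBECONFIG` as a single path.

```python
import json
import os
from pathlib import Path

SERVICE_ACCOUNT_NAMESPACE_FILE = "/var/run/secrets/kubernetes.io/serviceaccount/namespace"


def namespace_from_kubeconfig(path):
    """Return the namespace of the current context in a JSON kubeconfig, or None."""
    try:
        config = json.loads(Path(path).read_text())
    except (OSError, ValueError):
        return None  # missing, unreadable, or not JSON
    if not isinstance(config, dict):
        return None
    current = config.get("current-context")
    for entry in config.get("contexts") or []:
        if entry.get("name") == current:
            return (entry.get("context") or {}).get("namespace") or None
    return None


def namespace_from_file(path):
    """Return the stripped contents of a plain-text namespace file, or None."""
    try:
        return Path(path).read_text().strip() or None
    except OSError:
        return None


def resolve_namespace(user_value=None, config_file=None, env=None,
                      sa_file=SERVICE_ACCOUNT_NAMESPACE_FILE):
    """Pick a namespace: the explicit value first, then the documented order.

    config_file=None models "Default cluster configuration";
    a path models "Cluster Config file information".
    """
    if user_value:
        return user_value
    env = os.environ if env is None else env
    if config_file is not None:
        candidates = [namespace_from_kubeconfig(config_file)]
    else:
        candidates = []
        if env.get("KUBECONFIG"):
            candidates.append(namespace_from_kubeconfig(env["KUBECONFIG"]))
        if env.get("HOME"):
            home_config = Path(env["HOME"]) / ".kube" / "config"
            candidates.append(namespace_from_kubeconfig(home_config))
        candidates.append(namespace_from_file(sa_file))
    for candidate in candidates:
        if candidate:
            return candidate
    return "default"  # last resort in both modes
```

The point of the sketch is that the value typed in the UI always wins, and “default” is used only when every other source comes up empty.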

This implementation hides the complexity of the security configuration for the k8s cluster. Assuming that the security configuration for the namespace you are allowed to use is set up correctly, from the Workload Automation perspective you only need to use WA Security to grant access to this job plugin and to monitor job status.

Run Kubernetes Job:

Once the connection to the cluster is established, it’s time to submit a k8s job to the cluster and monitor it through the Kubernetes plugin in WA, without switching to the cluster environment or knowing all the Kubernetes commands.

WA makes this hassle-free: you can submit a job to the k8s cluster with just a few clicks.

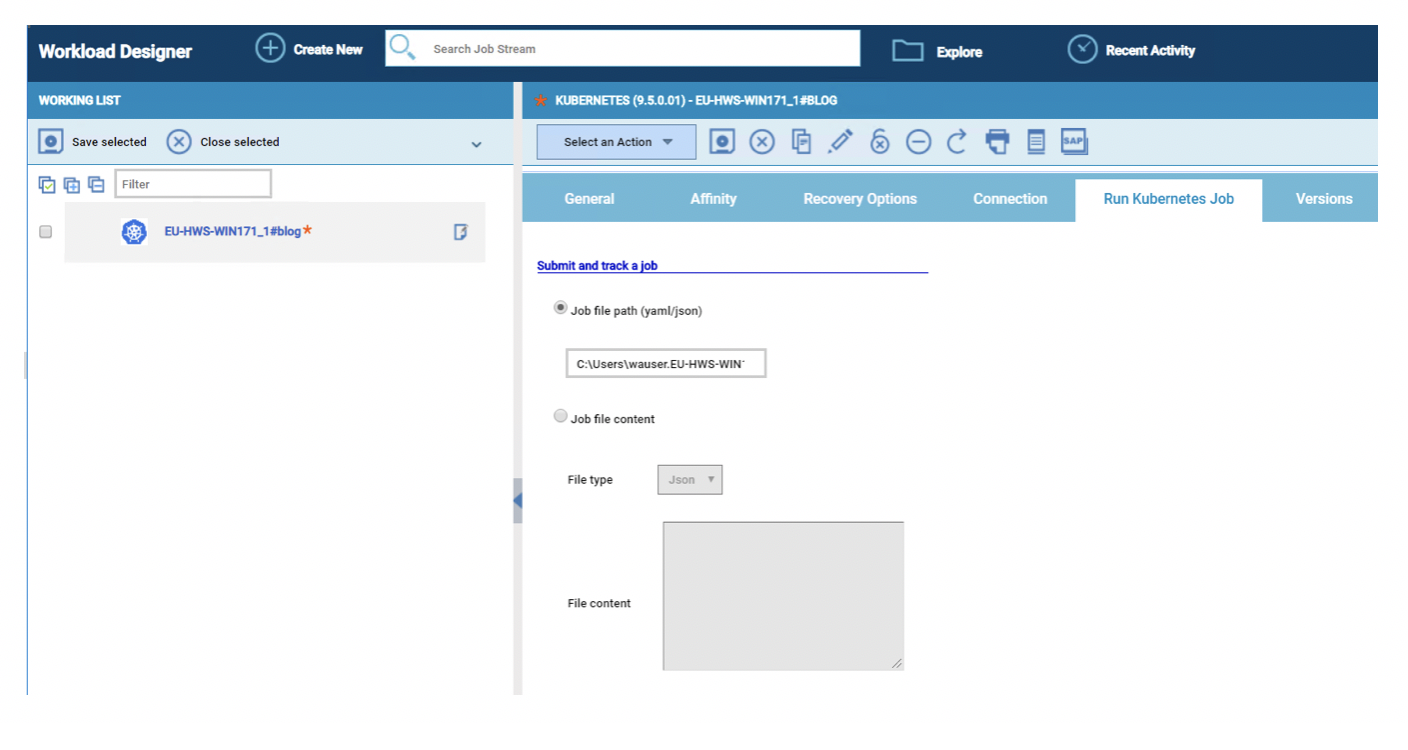

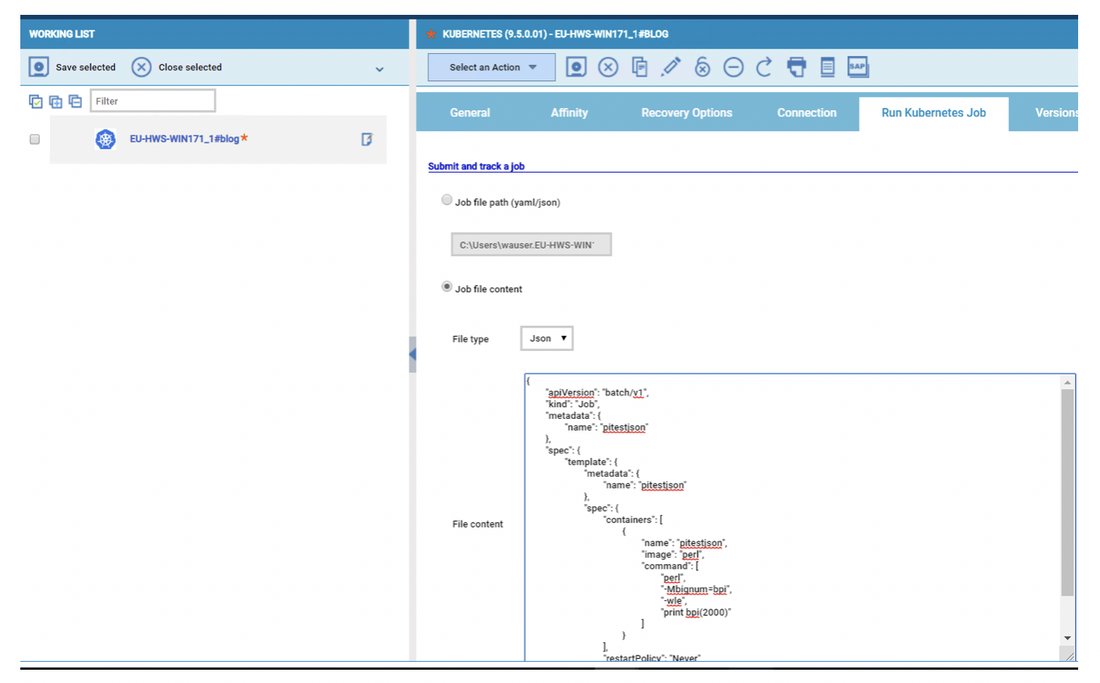

There are two simpler options provided in the plugin to submit a job:

That’s all, you have submitted a job to the Kubernetes cluster through WA in no time.

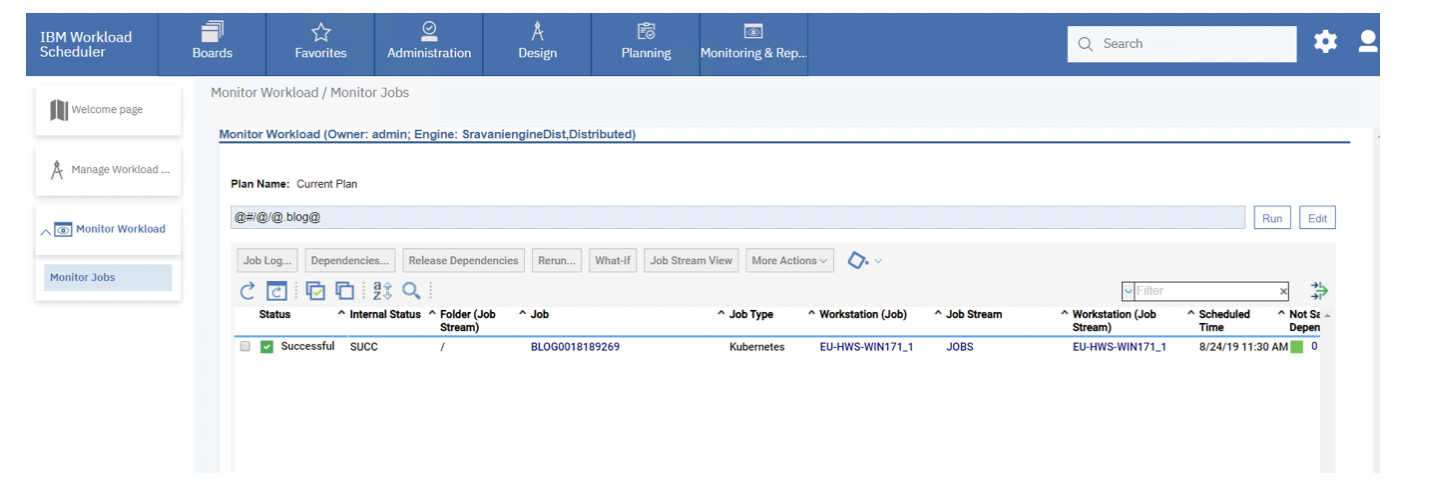

Track/Monitor the Kubernetes Job:

You can also easily monitor the submitted k8’s job in WA through navigating to “Monitor Workload” page.

- If you have the k8’s job content already saved in a file on the agent. Then, you just need to specify the path of the job content on the agent. The file type can be either Json/Yaml.

- Or, if you have the job content handy and don’t want to save to a file on the agent, then just choose the “Job file content” option and copy the content to the text area in the UI.

That’s all, you have submitted a job to the Kubernetes cluster through WA in no time.
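
If you are wondering what the job content can look like, it is presumably a standard Kubernetes Job definition. As a rough sketch (not taken from the plugin), the Python snippet below builds a minimal `batch/v1` Job and prints it as JSON, which you could save to a file on the agent or paste into the “Job file content” text area. The job name, image, and command are placeholders chosen for illustration.

```python
import json


def build_job_manifest(name, image, command, backoff_limit=2):
    """Return a minimal batch/v1 Job definition as a plain dict."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # Number of retries before the Job is marked as failed.
            "backoffLimit": backoff_limit,
            "template": {
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "command": list(command)}
                    ],
                    # Jobs require a restart policy of Never or OnFailure.
                    "restartPolicy": "Never",
                }
            },
        },
    }


if __name__ == "__main__":
    manifest = build_job_manifest(
        "hello-wa",      # placeholder job name
        "busybox:1.36",  # placeholder image
        ["sh", "-c", "echo Hello from Workload Automation"],
    )
    print(json.dumps(manifest, indent=2))
```

No namespace is set in the metadata here, so the job would land in whatever namespace is provided or resolved on the “Connection” tab.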

Track/Monitor the Kubernetes Job:

You can also easily monitor the submitted k8s job in WA by navigating to the “Monitor Workload” page.

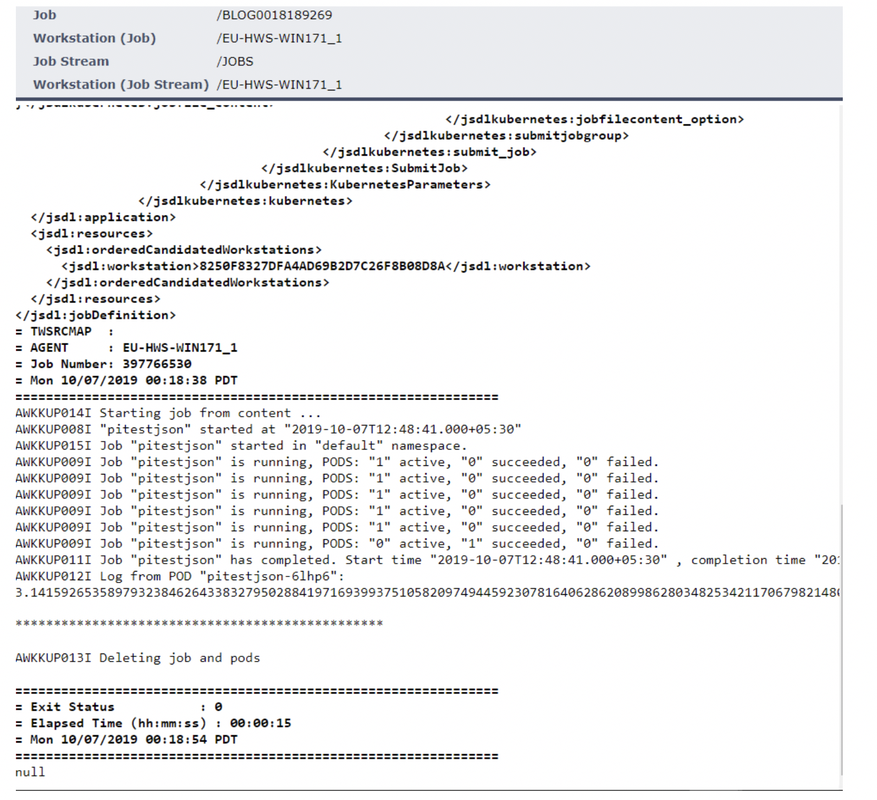

Select the job and click the job log option to view the logs of the Kubernetes job.

Here, you can see that the Kubernetes plugin submitted the job to the cluster, monitored it, and cleaned up the environment (deleting the job and all the pods associated with it).
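
The submit, monitor, and clean-up cycle seen in the log can be pictured with a small Python sketch. It is only a conceptual illustration and not the plugin’s implementation: `submit`, `get_status`, and `delete` are hypothetical callables standing in for whatever talks to the cluster, and the status check relies on the standard `Complete` and `Failed` conditions that Kubernetes reports on a Job.

```python
import time


def job_outcome(status):
    """Classify a Job's .status mapping as 'succeeded', 'failed', or 'running'."""
    for condition in status.get("conditions") or []:
        if condition.get("status") != "True":
            continue
        if condition.get("type") == "Complete":
            return "succeeded"
        if condition.get("type") == "Failed":
            return "failed"
    return "running"


def run_job(submit, get_status, delete, poll_seconds=5.0, sleep=time.sleep):
    """Submit a job, poll until it finishes, and always clean up afterwards.

    submit() returns the job name, get_status(name) returns the Job status
    mapping, and delete(name) removes the job and its pods. All three are
    hypothetical stand-ins supplied by the caller.
    """
    job_name = submit()
    try:
        while True:
            outcome = job_outcome(get_status(job_name))
            if outcome != "running":
                return outcome
            sleep(poll_seconds)
    finally:
        # Clean-up runs whether the job succeeded, failed, or polling raised.
        delete(job_name)
```

Putting the clean-up in a `finally` block is a design choice for the sketch: the cluster resources are released even if monitoring is interrupted.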

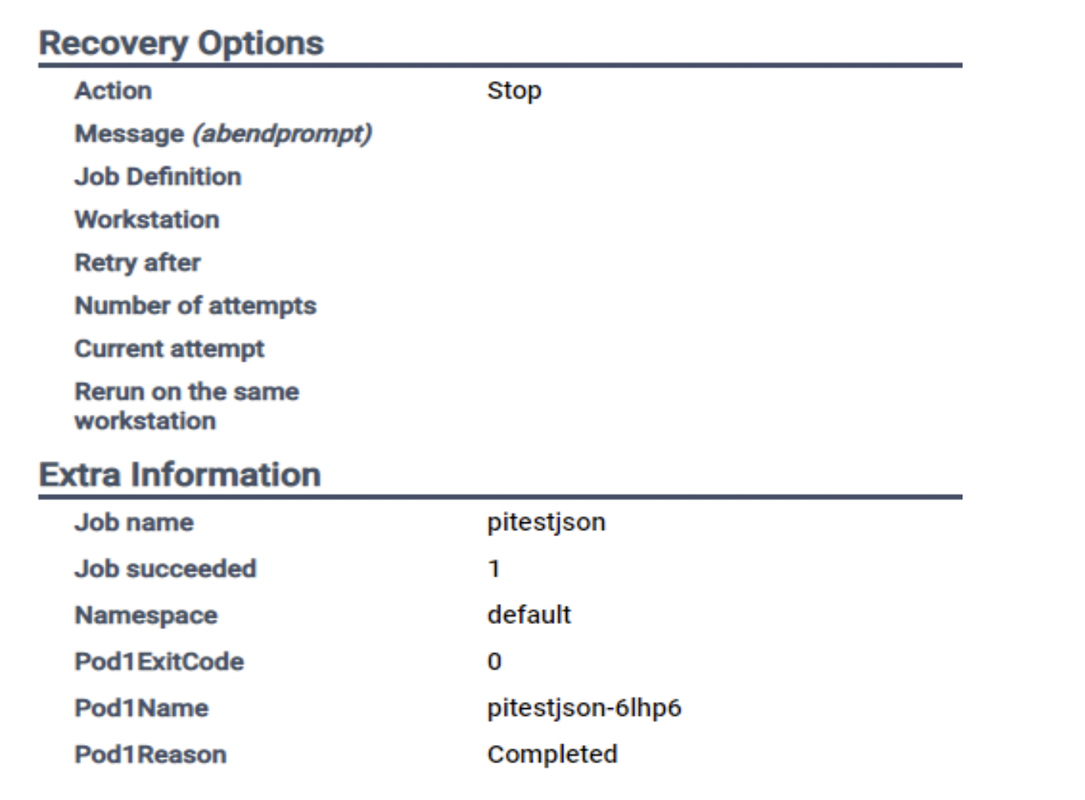

Extra Information:

You can see that the plug-in provides a few “Extra properties”, which you can use as variables for the next job submission.

Thus, the Kubernetes plugin in Workload Automation is a great fit for anyone looking for complete automation of jobs, whether they connect to a remote cluster or use an agent deployed on the cluster.

Are you curious to try out the Kubernetes plugin?

Drop us a line at francesco.valentini@hcl.com and become a sponsor user!

Sravani Kancherla, Technical Lead at HCL.

Sravani works as a full stack developer for Workload Automation at HCL PNP Bangalore. She earned her Master’s degree at the New Jersey Institute of Technology and has worked across locations in the USA and India, on technologies including Java, Spring, BPMN, Cloud, JavaScript, and ReactJS. She loves to code and to stay on top of new technologies.

Giorgio Corsetti, Workload Automation Test Architect

Giorgio works as a Performance Test Engineer in the Workload Automation team. In this role, he identifies bottlenecks in the architecture under analysis by assessing how the overall system handles specific loads, and he helps increase customer satisfaction by publishing technical documents with feedback on performance improvements when new product releases become available. Since April 2018 he has also covered the role of Test Architect for the Workload Automation product family. Giorgio has a degree in Physics and is currently based in the HCL Products and Platforms Rome software development laboratory. https://www.linkedin.com/in/giorgio-corsetti-8b13224/

Serena Girardini, Workload Automation Test Technical Leader in distributed environments

Serena joined IBM in 2000 as a Tivoli Workload Scheduler developer and was involved in the product relocation from the San Jose Lab to the Rome Lab during a short-term assignment in San Jose (CA). Over 14 years, she gained experience in the Tivoli Workload Scheduler distributed product suite as a developer, customer support engineer, tester, and information developer. For a long time she was the Test Team Leader for L3 fix pack releases, and in this period she acted as a facilitator during critical situations and upgrade scenarios at customer sites. In her last 4 years at IBM she was the IBM Cloud Resiliency and Chaos Engineering Test Team Leader. She joined HCL in April 2019 as an expert Tester for the IBM Workload Automation product suite and was recognized as Test Leader for the porting of the product to the most important Cloud offerings in the market. https://www.linkedin.com/in/serenagirardini/